Simon

Let’s think about cognitive bias : Nature News & Comment

Broadcaster Jon Ronson said: "Ever since I first learned about confirmation bias I’ve been seeing it everywhere."

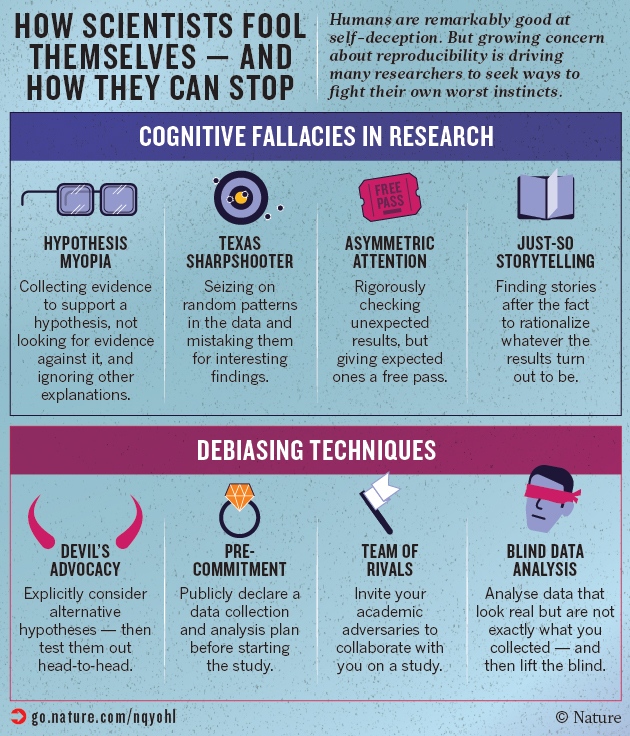

Nature's latest on problems in research focuses on the problem of being human.

One enemy of robust science is our humanity — our appetite for being right, and our tendency to find patterns in noise, to see supporting evidence for what we already believe is true, and to ignore the facts that do not fit.

There follows a series of recommendations, including crowdsourcing analysis (one dataset, lots of different teams, which produces more accurate, more nuanced and less biased results); blind analysis (prize to anyone who can explain this in lay terms); and preregistering analysis plans [and sticking to them!]. Worth a read.

There are several related articles:

- How scientists fool themselves – and how they can stop : Nature News & Comment

- Crowdsourced research: Many hands make tight work : Nature News & Comment

- Blind analysis: Hide results to seek the truth : Nature News & Comment

The editorial points to the big data fields of genomics and proteomics that got their act together after early work 'was plagued by false positives':

That need is particularly acute in statistical data analysis, where some of the best-established methods were developed in a time before data sets were measured in terabytes, and where choices between techniques offer abundant opportunity for errors. Proteomics and genomics, for example, crunch millions of data points at once, over thousands of gene or protein variants. Early work was plagued by false positives, before the spread of techniques that could account for the myriad hypotheses that such a data-rich environment could generate.

...Finding the best ways to keep scientists from fooling themselves has so far been mainly an art form and an ideal. The time has come to make it a science. We need to see it everywhere.
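To make the false-positives point a bit more concrete, here is a rough sketch of my own (not from the editorial). It assumes 10,000 tests where nothing real is going on, and compares the usual p < 0.05 cut-off with a Bonferroni-corrected one. Bonferroni is just one well-known correction for multiple testing; the editorial doesn't say which techniques the genomics and proteomics fields adopted, and the numbers and function names here are made up for illustration.

```python
import random


def simulate_null_p_values(n_tests, seed=0):
    """Draw p-values for tests where no real effect exists.

    Under a true null hypothesis a p-value is uniformly distributed
    on [0, 1), so random.random() is a fair stand-in.
    """
    rng = random.Random(seed)
    return [rng.random() for _ in range(n_tests)]


def count_significant(p_values, threshold):
    """Count how many p-values fall below the given threshold."""
    return sum(1 for p in p_values if p < threshold)


n_tests = 10_000  # hypothetical number of gene/protein variants tested
alpha = 0.05      # conventional significance level

p_values = simulate_null_p_values(n_tests)

# Uncorrected: every test is judged against alpha on its own.
naive_hits = count_significant(p_values, alpha)

# Bonferroni: divide alpha by the number of tests performed.
bonferroni_hits = count_significant(p_values, alpha / n_tests)

print(f"naive: {naive_hits}, Bonferroni: {bonferroni_hits}")
```

With no real effects at all, the uncorrected cut-off should still flag roughly 5% of the tests (about 500 here) as "significant", while the corrected cut-off should flag almost none. The price is that a correction this strict can also miss real effects.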

Added: a couple of interesting points from the crowdsourcing analysis paper, which looks at what happened when 29 different teams analysed the same dataset: after discussion, most of the approaches were deemed valid, though they produced a range of answers:

Crowdsourcing research can reveal how conclusions are contingent on analytical choices.

Under the current system, strong storylines win out over messy results. Worse, once a finding has been published in a journal, it becomes difficult to challenge. Ideas become entrenched too quickly, and uprooting them is more disruptive than it ought to be. The crowdsourcing approach gives space to dissenting opinions.
